RoNIN: Robust Neural Inertial Navigation

RoNIN: Robust Neural Inertial Navigation in the Wild: Benchmark, Evaluations, and New Methods

Hang Yan*, Sachini Herath*, Yasutaka Furukawa

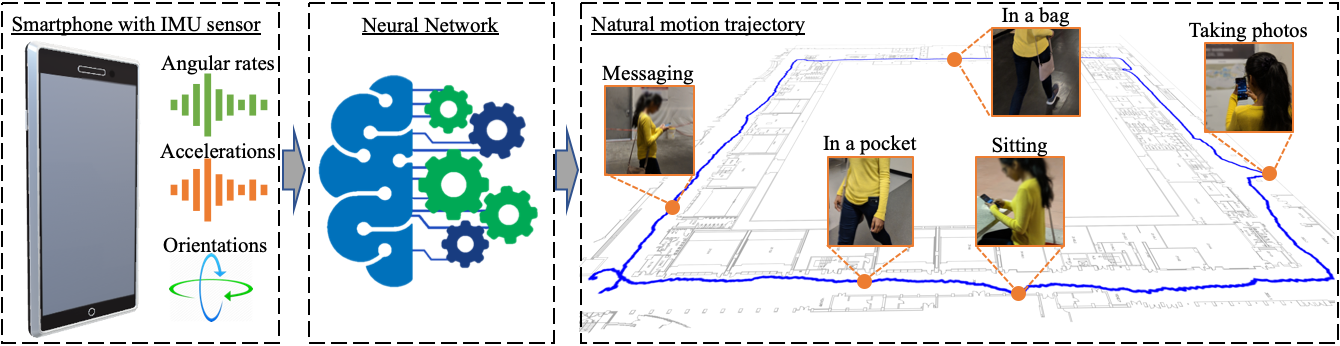

Abstract: This paper sets a new foundation for data-driven inertial navigation research, where the task is the estimation of positions and orientations of a moving subject from a sequence of IMU sensor measurements. More concretely, the paper presents 1) a new benchmark containing more than 40 hours of IMU sensor data from 100 human subjects with ground-truth 3D trajectories under natural human motions; 2) novel neural inertial navigation architectures, making significant improvements for challenging motion cases; and 3) qualitative and quantitative evaluations of the competing methods over three inertial navigation benchmarks. We will share the code and data to promote further research.

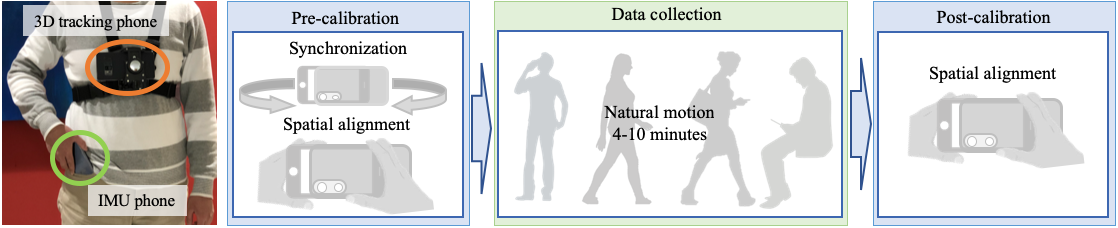

RoNIN is the largest inertial navigation database, consisting of more than 42.7 hours of IMU and ground-truth 3D motion data from 100 human subjects. We have developed a two-device data acquisition protocol: a harness attaches a 3D tracking phone to the subject's body, while the subject handles a second phone freely for IMU data collection.

This protocol introduces two important changes to the nature of motion learning tasks:

- The positional ground-truth is obtained only for the 3D tracking phone attached to a harness, and our task is to estimate the trajectory of a body instead of the IMU phone.

- The data offers a new task of body heading estimation. This task is more challenging because the body orientation can differ arbitrarily from the device orientation, depending on how one carries the phone.

The data and pre-trained models can be used only for research purposes.

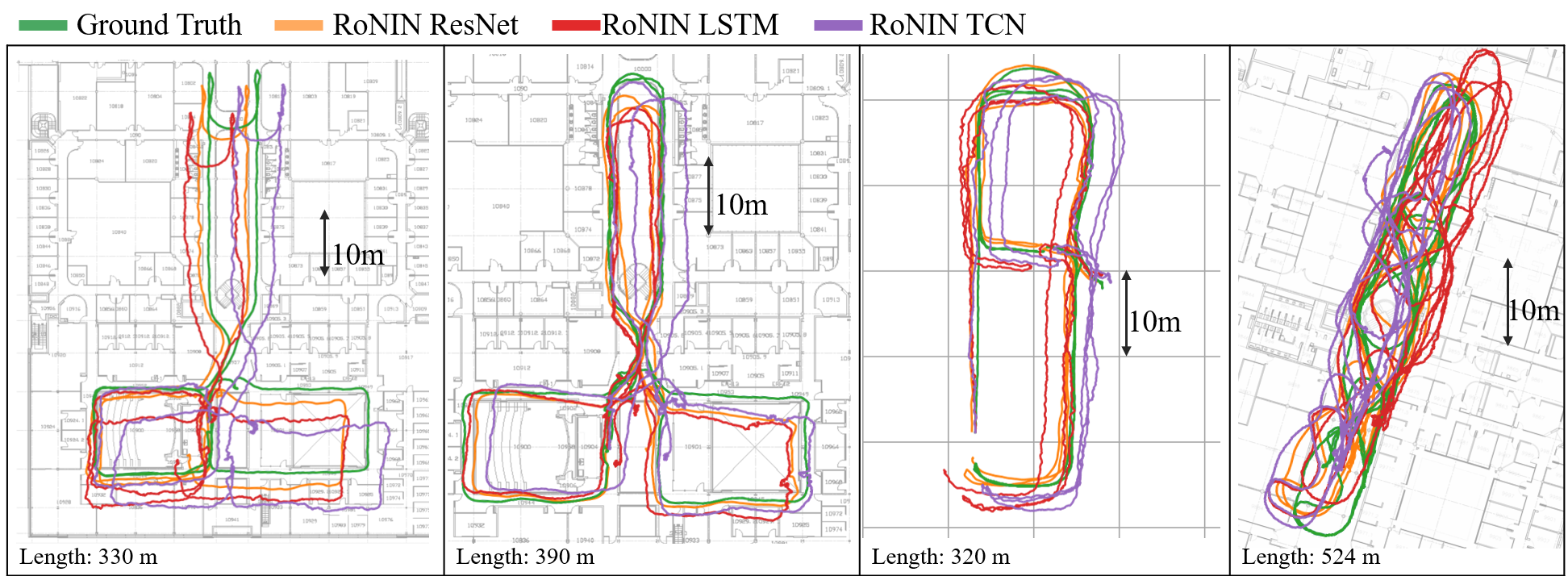

| Test | Subject | Metric | NDR | PDR | RIDI | IONet | RoNIN ResNet | RoNIN LSTM | RoNIN TCN |
|---|---|---|---|---|---|---|---|---|---|
| RIDI Dataset | Seen | ATE | 31.06 | 3.52 | 1.88 | 11.46 | 1.63 | 2.00 | 1.66 |
| RIDI Dataset | Seen | RTE | 37.53 | 4.56 | 2.38 | 14.22 | 1.91 | 2.64 | 2.16 |
| RIDI Dataset | Unseen | ATE | 32.01 | 1.94 | 1.71 | 12.50 | 1.67 | 2.08 | 1.66 |
| RIDI Dataset | Unseen | RTE | 38.04 | 1.81 | 1.79 | 13.38 | 1.62 | 2.10 | 2.26 |
| OXIOD Dataset | Seen | ATE | 716.31 | 2.12 | 4.12 | 1.79 | 2.40 | 2.02 | 2.26 |
| OXIOD Dataset | Seen | RTE | 606.75 | 2.11 | 3.45 | 1.97 | 1.77 | 2.33 | 2.63 |
| OXIOD Dataset | Unseen | ATE | 1941.41 | 3.26 | 4.50 | 2.63 | 6.71 | 7.12 | 7.76 |
| OXIOD Dataset | Unseen | RTE | 848.55 | 2.32 | 2.70 | 2.63 | 3.04 | 5.42 | 5.78 |
| RoNIN Dataset | Seen | ATE | 675.21 | 29.54 | 17.06 | 31.07 | 3.54 | 4.18 | 4.38 |
| RoNIN Dataset | Seen | RTE | 169.48 | 21.36 | 17.50 | 24.61 | 2.67 | 2.63 | 2.90 |
| RoNIN Dataset | Unseen | ATE | 458.06 | 27.67 | 15.66 | 32.03 | 5.14 | 5.32 | 5.70 |
| RoNIN Dataset | Unseen | RTE | 117.06 | 23.17 | 18.91 | 26.93 | 4.37 | 3.58 | 4.07 |

Position Results
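This page does not define the ATE and RTE columns, so the following is only a minimal sketch under a common reading: ATE (absolute trajectory error) is taken as the RMSE between estimated and ground-truth positions over a whole trajectory, and RTE (relative trajectory error) as the RMSE of the displacement error over a fixed interval. The function names `ate` and `rte`, the 2D tuple representation, and the `delta` parameter (interval length in samples) are assumptions made for illustration, not the authors' evaluation code.

```python
import math


def ate(est, gt):
    """Assumed ATE: RMSE of the position error over the full trajectory.

    est, gt: equal-length sequences of (x, y) positions.
    """
    if len(est) != len(gt) or not est:
        raise ValueError("trajectories must be non-empty and of equal length")
    sq_err = [(ex - gx) ** 2 + (ey - gy) ** 2
              for (ex, ey), (gx, gy) in zip(est, gt)]
    return math.sqrt(sum(sq_err) / len(sq_err))


def rte(est, gt, delta):
    """Assumed RTE: RMSE of the displacement error over `delta` samples.

    For each start index i, compare the estimated displacement
    est[i + delta] - est[i] with the ground-truth displacement.
    """
    if len(est) != len(gt):
        raise ValueError("trajectories must have equal length")
    if not 1 <= delta < len(est):
        raise ValueError("delta must satisfy 1 <= delta < len(trajectory)")
    sq_err = []
    for i in range(len(est) - delta):
        dx = (est[i + delta][0] - est[i][0]) - (gt[i + delta][0] - gt[i][0])
        dy = (est[i + delta][1] - est[i][1]) - (gt[i + delta][1] - gt[i][1])
        sq_err.append(dx * dx + dy * dy)
    return math.sqrt(sum(sq_err) / len(sq_err))


# Toy example: the estimate is the ground truth shifted by a constant (3, 4).
gt_path = [(0, 0), (1, 0), (2, 0), (3, 0)]
est_path = [(x + 3, y + 4) for x, y in gt_path]
print(ate(est_path, gt_path))     # 5.0
print(rte(est_path, gt_path, 2))  # 0.0
```

In the toy example a constant offset yields a large ATE but zero RTE. Under these assumed definitions, the two metrics separate global misalignment from local drift, which is one reason a table might report both.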

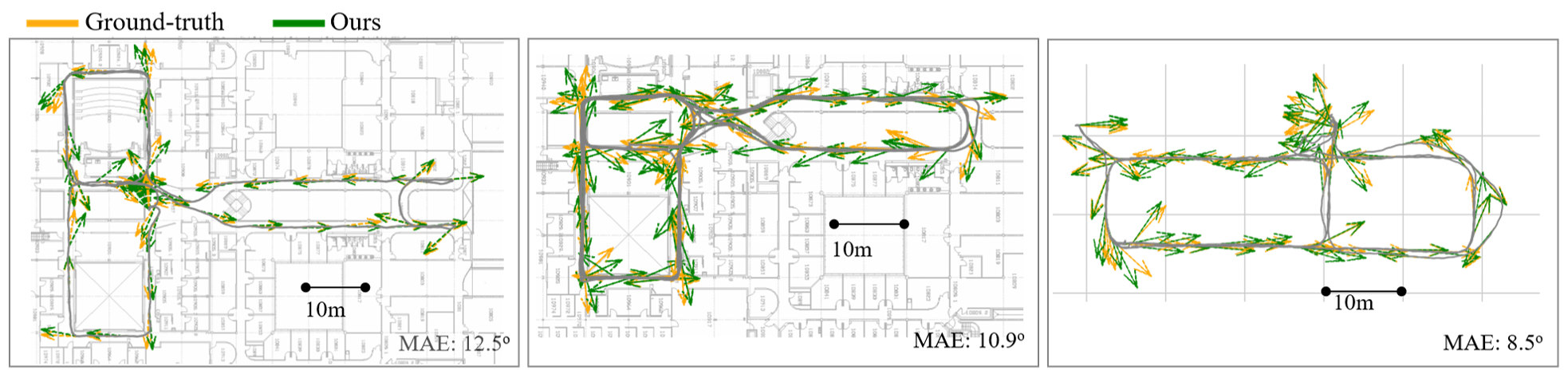

Heading Results
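The page does not state how heading accuracy is scored. As a hedged illustration of one plausible metric, the sketch below computes a mean absolute angular error between predicted and ground-truth body headings, wrapping differences so that 350° and 10° count as 20° apart rather than 340°. The helper names `wrap_angle` and `mean_heading_error_deg` are hypothetical and not taken from the RoNIN code.

```python
import math


def wrap_angle(angle):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi


def mean_heading_error_deg(pred, gt):
    """Mean absolute heading error in degrees (an assumed metric).

    pred, gt: equal-length sequences of heading angles in radians.
    """
    if len(pred) != len(gt) or not pred:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [abs(wrap_angle(p - g)) for p, g in zip(pred, gt)]
    return math.degrees(sum(errors) / len(errors))


# Headings on either side of the 0/360 degree boundary.
pred = [math.radians(350.0), math.radians(90.0)]
gt = [math.radians(10.0), math.radians(80.0)]
print(mean_heading_error_deg(pred, gt))  # approximately 15 (errors of 20 and 10 degrees)
```

Wrapping before taking the absolute value matters here because body heading is a circular quantity; a naive subtraction would heavily penalize predictions that are close to the truth but fall on the other side of the wrap-around point.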